ChatGPT, the AI-powered chatbot developed by OpenAI, is all the rage right now! Since its launch in November 2022, ChatGPT has taken the internet by storm with its surprising ability to deliver well-formed answers to complex questions, compose essays, write poems, and even generate code. The natural language processing tool has been called a game changer, capturing the public imagination and fueling conversations about the future of artificial intelligence.

While the technology will likely have a big impact on many domains, in this blog post we want to explore the cybersecurity implications of ChatGPT. Is the technology a threat to cybersecurity, or is it an asset that can help cyber professionals strengthen their defenses?

Is ChatGPT a cybersecurity risk?

While ChatGPT has become everyone’s new favorite tool, many IT professionals are raising concerns about the cybersecurity risks of this technology.

BlackBerry surveyed 1,500 IT decision makers from North America, the UK, and Australia and found that 51% believe ChatGPT will enable a successful cyberattack in less than a year.


Experts worry that hackers may misuse ChatGPT to write malicious code or phishing emails. It is worth noting that OpenAI's model behavior guidelines for ChatGPT disallow the creation of "content that attempts to generate ransomware, keyloggers, viruses, or other software intended to impose some level of harm." The company has controls in place to prevent malicious use of the chatbot.

However, security researchers have already found evidence of hackers experimenting with the tool to develop malware. Check Point Research analyzed underground hacking communities and found three instances of hackers using ChatGPT to recreate malware strains or develop Dark Web marketplace scripts. In one instance, a tech-savvy cybercriminal shared code he had created for a Python-based information stealer.

Another hacker noted that OpenAI gave him a “nice helping hand to finish the script with a nice scope” for his alleged first-ever Python script. Some researchers see ChatGPT as an assistant to human expertise, suggesting that expert authors play a key role in “prompting the model to write, and crucially, correct the code it generates.” Others, however, believe that ChatGPT will democratize cybercrime.

A report by Insikt Group noted that, “ChatGPT is lowering the barrier to entry for malware development by providing real-time examples, tutorials, and resources for threat actors that might not know where to start.”

Besides malware, hackers might use the tool for phishing

Phishing is one of the most common attack vectors used by cybercriminals, and poor or grammatically incorrect language is often a key red flag. ChatGPT's ability to write coherent, almost human-like content could help cybercriminals craft convincing phishing emails. In fact, this is the top global concern for 53% of the IT professionals surveyed by BlackBerry. ChatGPT could make it faster for a hacker to create a legitimate-sounding phishing email, especially for bad actors who are not fluent in English.

But it’s not all bad. ChatGPT can also help cyber defenders.

While ChatGPT does have its downsides, the AI-driven tool can also be a valuable resource for cybersecurity professionals. Because the chatbot appears able to read and debug code, ethical hackers and security teams could potentially use ChatGPT to help detect threats.

Cyber defenders can also use the tool to generate sample code for penetration testing, draft sample information security policies, and assist with security incident analysis, helping them build strong cybersecurity programs and speed up incident response.

ChatGPT’s capabilities could further help ease the burden on short-staffed security teams that are coping with the challenges of the ongoing cybersecurity talent shortage.

Takeaway for your business

Like any new technology, ChatGPT has its pros and cons. Depending on who is using the tool and with what intentions, the chatbot can be either a security threat or an asset. As Mike Kannan, GraVoc's Director of IT Assurance & Security Research, noted:

“ChatGPT and other AI tools will allow security practitioners and the dark-side alike to really push testing to new limits, ranging from innovative ways to phish someone to creating brand new techniques across to developing entirely new companies.”

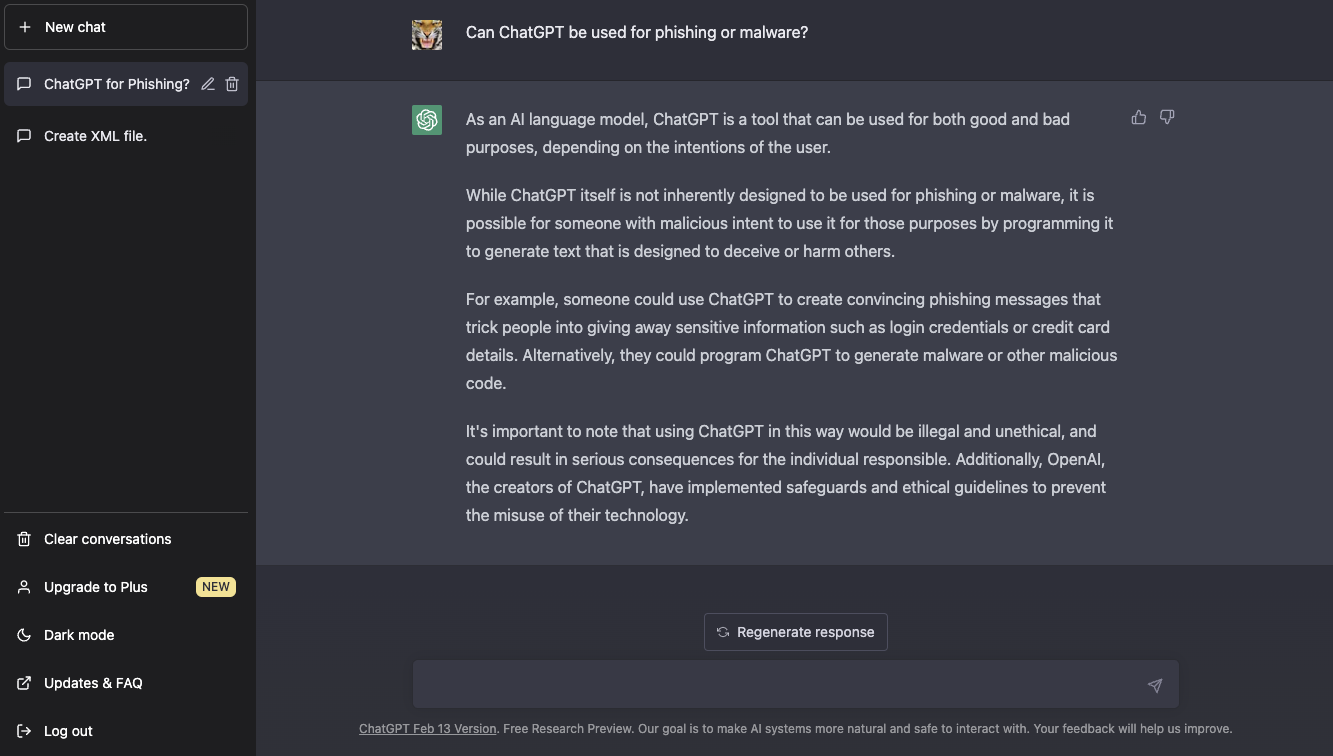

We also thought it would be interesting to ask ChatGPT whether it can be used for phishing or malware, here is the chat bot’s response:

Ultimately, businesses should be prepared to discuss the security risks of ChatGPT with employees and encourage caution as they experiment with the tool's capabilities. Through regular security awareness training, businesses can make sure employees understand the downsides of the tool and how it could be misused for malware and phishing. Businesses should also maximize network and endpoint protection and conduct regular assessments, such as penetration testing and adversary simulation, to ensure strong cyber readiness and resilience.

Looking for cybersecurity solutions?

Our cybersecurity team can help prepare your business to mitigate the security risks of ChatGPT. Contact us today to learn more about our information security services and get started!
